Choosing your container environment on AWS with Lightsail, ECS, EKS, Lambda, and Fargate

Back in 2017 I wrote an article about choosing your container environment on AWS. AWS Fargate and Amazon Elastic Kubernetes Service had just launched at AWS re:Invent, joining the preexisting Amazon Elastic Container Service. My original article was extremely popular as folks were trying to decide between the new choices available to them.

Since AWS re:Invent 2020 added a couple of new options for running containers, it is time to take another look at the options available today.

Picking an orchestrator

Your application container is built and running locally on your dev machine. But you want to get it running in the cloud so that other people can access your application.

The first thing you need to do is pick a container orchestrator: a tool that automatically starts copies of your container in the cloud. The orchestrator watches over your application container and restarts it if it crashes, so that your application stays online. When you need to update your application, the orchestrator rolls out the new version, and as your traffic grows and shrinks, it can adjust the number of running containers up and down.
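To make the orchestrator's job concrete, here is a toy sketch of that control loop in Python. It is not how any real orchestrator is implemented: `ToyOrchestrator` and `Container` are hypothetical names, and the "cluster" is just an in-memory list. Each `reconcile()` pass replaces crashed containers, rolls out a new version one container at a time, and scales to the desired count.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    id: int
    version: str
    healthy: bool = True


@dataclass
class ToyOrchestrator:
    """Hypothetical in-memory model of an orchestrator's control loop."""
    desired_count: int
    desired_version: str
    running: list = field(default_factory=list)
    next_id: int = 0

    def _start(self) -> Container:
        # Launch a new copy of the container at the desired version.
        self.next_id += 1
        return Container(self.next_id, self.desired_version)

    def reconcile(self) -> None:
        # Self-healing: drop crashed containers; they are replaced below.
        self.running = [c for c in self.running if c.healthy]
        # Rolling update: replace at most one outdated container per pass.
        outdated = [c for c in self.running if c.version != self.desired_version]
        if outdated:
            self.running.remove(outdated[0])
            self.running.append(self._start())
        # Scaling: start or stop containers until the count matches.
        while len(self.running) < self.desired_count:
            self.running.append(self._start())
        del self.running[self.desired_count:]


if __name__ == "__main__":
    orch = ToyOrchestrator(desired_count=3, desired_version="v1")
    orch.reconcile()                  # starts 3 containers
    orch.running[0].healthy = False   # simulate a crash
    orch.reconcile()                  # the crashed copy is replaced
    orch.desired_version = "v2"       # request an update
    for _ in range(3):
        orch.reconcile()              # one container updated per pass
    print([c.version for c in orch.running])
```

Real orchestrators such as ECS and Kubernetes run a loop like this continuously against actual hosts, comparing the desired state you declare with what is actually running.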

There are four core options for orchestrating containers on AWS.

Amazon Lightsail Container Services are ideal if you are new to the cloud and just want to deploy a container on the internet without dealing with a lot of complexity. Lightsail has a simplified API that abstracts away many of the underlying AWS concepts, which makes it a good fit for developers who just want to focus on coding their application.

When you launch a Lightsail container service, it creates AWS cloud resources that are hidden away in a fully managed “shadow” account. You benefit from the power of AWS services without ever needing to see or touch them directly.

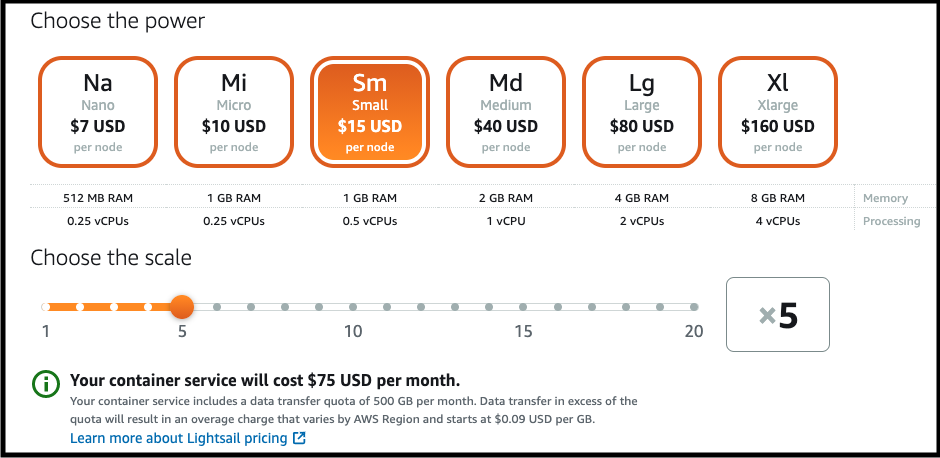

One benefit of Lightsail for small workloads is its focus on low, predictable costs. The billing model is simple and shows you up front how much your deployment will cost. The smallest container deployment currently costs $7 a month, and you can scale up both the node size and the node count as your traffic grows.
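Because Lightsail bills a fixed monthly price per node, projecting cost comes down to multiplying the node price by the node count. The sketch below uses the $7 smallest-node price quoted above; any other node price you pass in is an assumption to check against the current Lightsail pricing page. `monthly_cost` is a hypothetical helper, not part of any AWS SDK.

```python
SMALLEST_NODE_MONTHLY_USD = 7.00  # smallest Lightsail container node, per this article


def monthly_cost(price_per_node: float, node_count: int) -> float:
    """Estimate a Lightsail container service bill: node price x node count.

    Assumes a full month at a fixed per-node price; partial months and
    any extra charges are ignored in this sketch.
    """
    if node_count < 1:
        raise ValueError("a container service needs at least one node")
    return round(price_per_node * node_count, 2)


if __name__ == "__main__":
    for nodes in (1, 2, 3):
        print(nodes, "node(s):", monthly_cost(SMALLEST_NODE_MONTHLY_USD, nodes))
```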

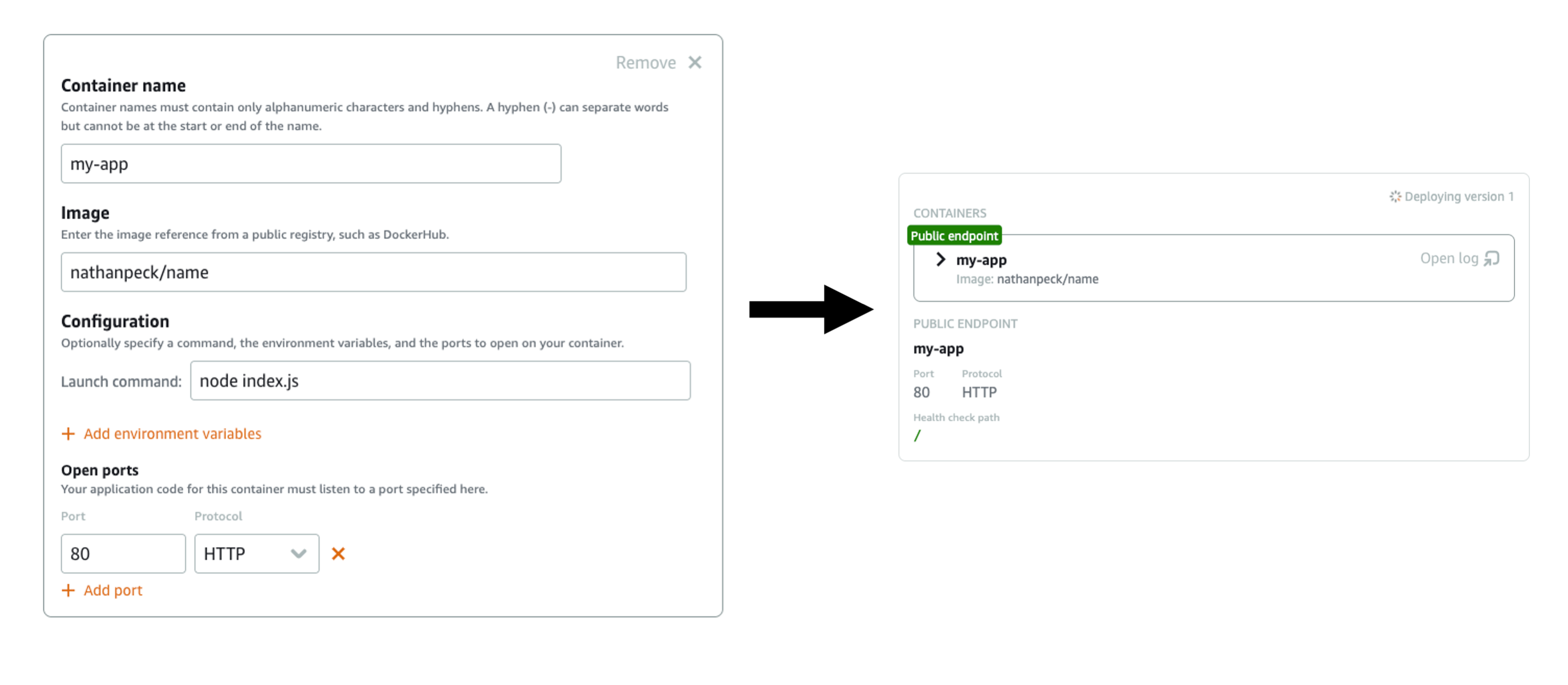

Lightsail Container Services greatly simplify deployments. All you need is your container image URL and the port on which the container expects traffic. You can optionally add environment variables and other settings.
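As a minimal sketch, these settings map onto the JSON documents that the AWS CLI's `aws lightsail create-container-service-deployment` command accepts through its `--containers` and `--public-endpoint` options. The Python below only builds that JSON; the container name `hello`, the image URL, and the `GREETING` variable are hypothetical placeholders.

```python
import json
from typing import Dict, Optional, Tuple


def lightsail_deployment(
    name: str, image: str, port: int, environment: Optional[Dict[str, str]] = None
) -> Tuple[dict, dict]:
    """Build the containers and public-endpoint documents for one container."""
    container: dict = {"image": image, "ports": {str(port): "HTTP"}}
    if environment:
        # Optional environment variables passed to the container.
        container["environment"] = environment
    containers = {name: container}
    # Route the service's public URL to this container and port.
    public_endpoint = {"containerName": name, "containerPort": port}
    return containers, public_endpoint


if __name__ == "__main__":
    containers, endpoint = lightsail_deployment(
        "hello", "example.com/hello:latest", 8080, {"GREETING": "hi"}  # placeholders
    )
    print(json.dumps(containers, indent=2))
    print(json.dumps(endpoint, indent=2))
```

Saved as `containers.json` and `public-endpoint.json`, these could be passed as `--containers file://containers.json --public-endpoint file://public-endpoint.json` together with `--service-name`.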

Lightsail gives you a URL for your application, and you can add a custom domain alias to point your own domain name at the Lightsail container service.

To learn more about Lightsail Containers:

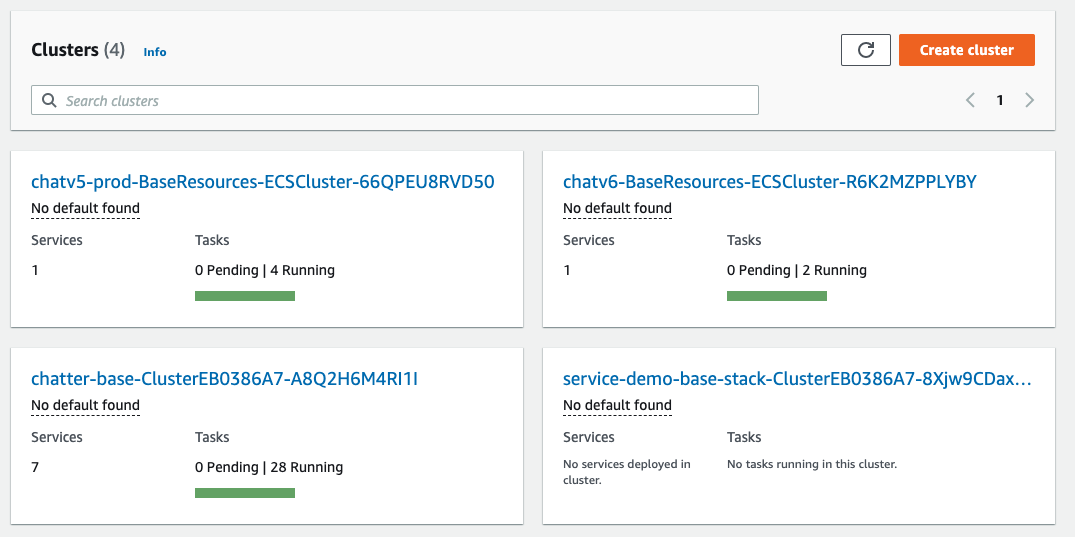

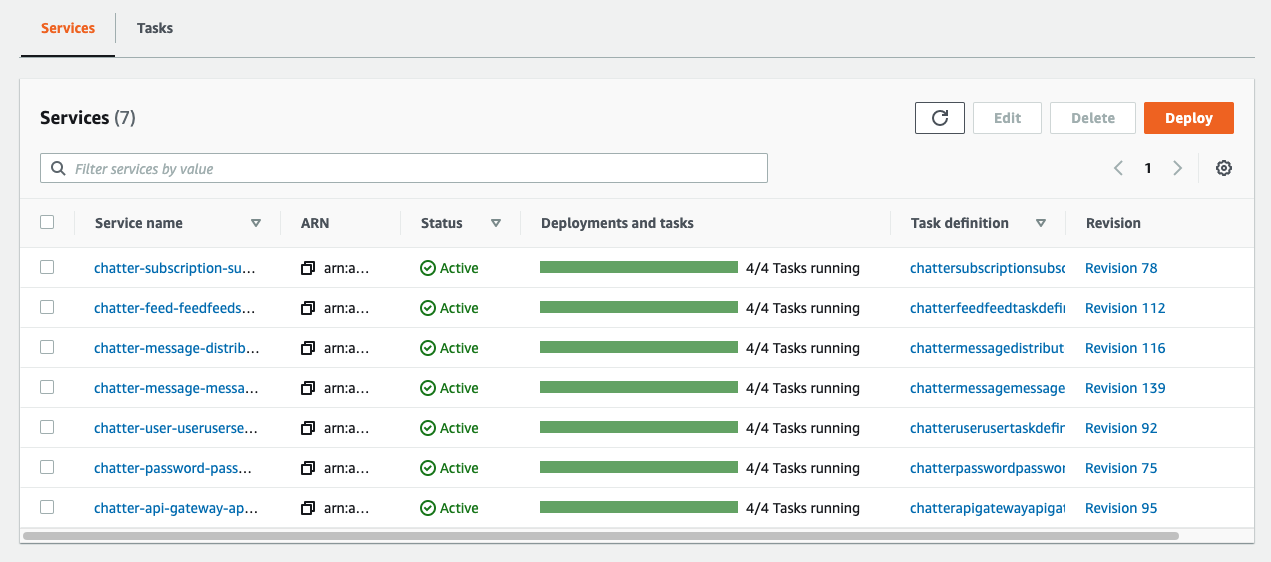

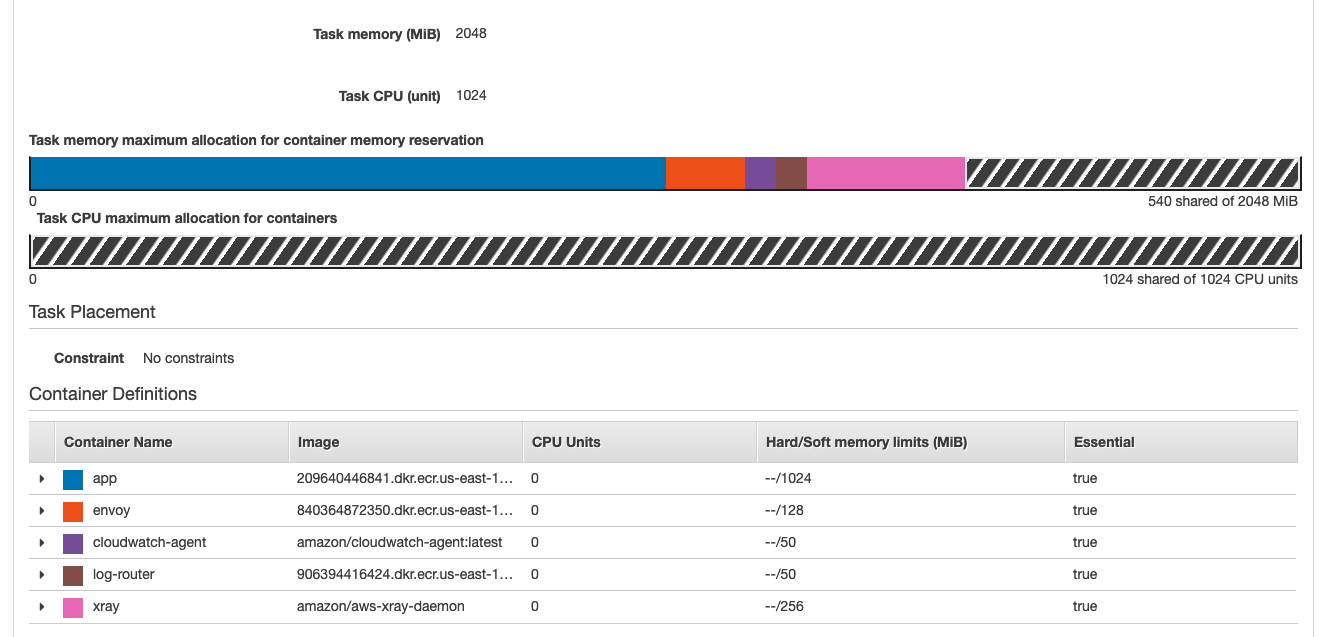

Amazon Elastic Container Service (ECS) is the underlying service that powers Lightsail. If your application is more complex, you may prefer to use ECS directly. Using ECS directly exposes many more features to learn, but it also gives you much more power for complex applications.

Amazon ECS has more integrations with other AWS services than Lightsail does. For web-facing services you can choose from a variety of ingresses, such as Application Load Balancer, Network Load Balancer, and API Gateway HTTP APIs. You can also use AWS App Mesh as a service mesh for networking and ingress.

If your application has stateful data, ECS integrates with Amazon Elastic File System (EFS) to store that data and ensure it can be accessed no matter which EC2 instance your container gets placed onto.

If you are doing machine learning, ECS can schedule workloads onto GPU-equipped instances and reserve dedicated GPUs for each container, and it integrates with Amazon Elastic Inference.
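Both integrations are declared in an ECS task definition. The sketch below builds one in Python as a plain dictionary: an EFS volume (`efsVolumeConfiguration`) mounted into the container through `mountPoints`, plus an optional GPU reservation through `resourceRequirements`. The family name, image, file system ID, and memory size are placeholders. Because ECS GPU support relies on GPU-equipped EC2 instances rather than Fargate, the sketch targets the `EC2` launch type.

```python
import json


def ecs_task_definition(family: str, image: str, efs_id: str,
                        mount_path: str = "/data", gpus: int = 0) -> dict:
    """Build a task definition with an EFS volume and optional GPUs."""
    volume_name = "shared-data"
    container = {
        "name": family,
        "image": image,
        "essential": True,
        "memory": 2048,  # hard memory limit in MiB (placeholder value)
        # Mount the EFS-backed volume wherever the task lands.
        "mountPoints": [{"sourceVolume": volume_name, "containerPath": mount_path}],
    }
    if gpus:
        # Ask ECS to place the task on an instance with enough free GPUs.
        container["resourceRequirements"] = [{"type": "GPU", "value": str(gpus)}]
    return {
        "family": family,
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [container],
        "volumes": [{
            "name": volume_name,
            "efsVolumeConfiguration": {
                "fileSystemId": efs_id,
                "rootDirectory": "/",
                "transitEncryption": "ENABLED",
            },
        }],
    }


if __name__ == "__main__":
    print(json.dumps(ecs_task_definition("trainer", "example.com/trainer:latest",
                                         "fs-12345678", gpus=1), indent=2))
```

The resulting JSON could be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`.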

There are a number of tools that you can use to deploy with Amazon ECS. The best tool to start with is AWS Copilot.

Copilot is designed for developers. You use it from the command line to build, push, and deploy your application container without having to touch a web console. Behind the scenes, it generates infrastructure-as-code definitions for your environment and application. Copilot can even create automated release pipelines that connect to your Git repository and deploy a new version of your application whenever you push code.

By default, Copilot deploys your application through ECS onto AWS Fargate. However, you can also use ECS with your own pool of EC2 instances, or even with self-managed on-premises machines via ECS Anywhere.

To learn more about Amazon ECS:

- Amazon ECS Workshop - This workshop guides you through your first microservice deployment.

- AWS Copilot - The documentation for AWS Copilot, with its own getting started guide.

- Awesome ECS - A curated list of ECS resources to learn from.

Amazon Elastic Kubernetes Service (EKS) is the best way to run Kubernetes on AWS. Kubernetes is an open source container orchestrator that focuses on portability and on giving you the most control over your infrastructure. In addition to running Kubernetes in the cloud, you can run a lightweight version of Kubernetes locally using kind, or an even more lightweight, Kubernetes API-compatible distribution called k3s. Amazon EKS runs upstream Kubernetes, patched and managed automatically so that you can focus more on your application.

Kubernetes comes out of the box with a basic set of built-in resources that can be used to deploy most containerized applications. These Kubernetes resources abstract the underlying cloud resources, such as load balancers and networking. As with Amazon Lightsail, you can deploy infrastructure using the Kubernetes API without touching AWS resources directly. However, there is no fully managed “shadow” account as there is with Lightsail, so AWS resources created by Kubernetes are still visible in your AWS account and remain your responsibility.
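For example, the built-in `Deployment` and `Service` resources are enough to run a web container behind a load balancer. The Python sketch below builds them as plain dictionaries and prints them as a JSON `List`, which `kubectl apply -f` accepts just like YAML. The app name, image, and ports are placeholders. On EKS, a `Service` of type `LoadBalancer` typically causes an AWS load balancer to be created in your account, which is exactly the kind of resource that stays visible and remains your responsibility.

```python
import json


def web_app_manifests(name: str, image: str, container_port: int, replicas: int = 2) -> dict:
    """Build a Deployment plus a LoadBalancer Service for one web container."""
    labels = {"app": name}  # ties the Service to the Deployment's pods
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # Kubernetes keeps this many pods running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": container_port}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",  # asks the cloud provider for a load balancer
            "selector": labels,
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(web_app_manifests("hello", "example.com/hello:latest", 8080), indent=2))
```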

If you have more specific needs that the default Kubernetes functionality can’t meet, you can install a variety of custom resources that further extend Kubernetes. For example, the aws-alb-ingress-controller extends Kubernetes with the ability to create AWS Application Load Balancers and automatically register containers in them. Container Storage Interface (CSI) drivers extend Kubernetes with the ability to store stateful data in Amazon EBS and Amazon EFS.
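As an illustration of such an extension, the sketch below builds an `Ingress` that asks the ALB controller for an internet-facing Application Load Balancer. It assumes the controller is installed (the project has since been renamed the AWS Load Balancer Controller) and a cluster that serves the `networking.k8s.io/v1` Ingress API (Kubernetes 1.19 or later). The names are placeholders, and `target-type: ip` registers pod IPs directly as load balancer targets.

```python
import json


def alb_ingress(name: str, service_name: str, service_port: int = 80) -> dict:
    """Build an Ingress handled by the AWS ALB controller."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # Hand this Ingress to the ALB controller.
                "kubernetes.io/ingress.class": "alb",
                # Create a public (internet-facing) load balancer.
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                # Register pod IPs directly as targets.
                "alb.ingress.kubernetes.io/target-type": "ip",
            },
        },
        "spec": {"rules": [{"http": {"paths": [{
            "path": "/",
            "pathType": "Prefix",
            "backend": {"service": {"name": service_name,
                                    "port": {"number": service_port}}},
        }]}}]},
    }


if __name__ == "__main__":
    print(json.dumps(alb_ingress("hello", "hello"), indent=2))
```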

In general, Kubernetes has all the same capabilities as Amazon ECS, though some require installing the right extensions. Fortunately, installing custom resources to extend Kubernetes is generally painless, thanks to the tireless work of the massive open source community that has embraced Kubernetes.

There are a number of tools for getting started with Amazon EKS. For setting up an Amazon EKS cluster, most developers use the eksctl CLI tool.

Once your cluster is ready, you can use kubectl to deploy applications onto it.
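eksctl can take its settings from a `ClusterConfig` file instead of command-line flags. The Python sketch below renders a minimal one as YAML text: a cluster with a managed node group, plus an optional Fargate profile for pods in the `default` namespace. The cluster name, Region, instance type, and node count are placeholders.

```python
def eksctl_cluster_config(name: str, region: str, instance_type: str = "m5.large",
                          nodes: int = 2, fargate: bool = False) -> str:
    """Render a minimal eksctl ClusterConfig as YAML text."""
    lines = [
        "apiVersion: eksctl.io/v1alpha5",
        "kind: ClusterConfig",
        "metadata:",
        f"  name: {name}",
        f"  region: {region}",
        "managedNodeGroups:",  # EC2 worker nodes managed by EKS
        f"  - name: {name}-nodes",
        f"    instanceType: {instance_type}",
        f"    desiredCapacity: {nodes}",
    ]
    if fargate:
        # Run pods in the default namespace on Fargate instead of EC2 nodes.
        lines += [
            "fargateProfiles:",
            "  - name: fp-default",
            "    selectors:",
            "      - namespace: default",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(eksctl_cluster_config("demo", "us-west-2", fargate=True), end="")
```

Saved as `cluster.yaml`, the output would be passed to `eksctl create cluster -f cluster.yaml`; after that, `kubectl apply -f` deploys your manifests onto the new cluster.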

To learn more about Amazon EKS:

- Amazon EKS Workshop - This extensive workshop helps you set up an Amazon EKS cluster and teaches you many of the core concepts of deploying applications in Kubernetes.

- eksctl - The CLI for creating an Amazon EKS cluster.

- AWS Controllers for Kubernetes - This open source project adds custom resources to Kubernetes that allow it to create and manage AWS resources like S3 buckets, databases, and queues.

AWS Lambda supports container images, making it a great way to create a serverless container deployment, particularly when you are sensitive to the cost of running your application. AWS Lambda requires that you make some minor changes to how you work with your containers and the code inside the container, but it is fully possible to create a container that works in both AWS Lambda and a traditional container orchestrator like Amazon ECS or Amazon EKS.
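Here is a minimal Python sketch of one container image serving both worlds, assuming an API Gateway proxy-style event on the Lambda side. The shared `greet` function holds the application logic; `handler(event, context)` is the entry point Lambda invokes, and `serve()` runs a long-lived HTTP server for ECS, EKS, or Lightsail. The names `greet`, `serve`, and the default port 8080 are hypothetical choices for this example.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse


def greet(name: str) -> dict:
    # Shared business logic, independent of where the container runs.
    return {"message": f"Hello, {name}!"}


def handler(event, context):
    # Lambda entry point; assumes an API Gateway proxy-style event.
    params = (event or {}).get("queryStringParameters") or {}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(greet(params.get("name", "world"))),
    }


class HttpHandler(BaseHTTPRequestHandler):
    # Long-running HTTP entry point for a traditional orchestrator.
    def do_GET(self):
        query = parse_qs(urlparse(self.path).query)
        payload = json.dumps(greet(query.get("name", ["world"])[0])).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    # Blocks forever, serving HTTP on all interfaces.
    HTTPServer(("0.0.0.0", port), HttpHandler).serve_forever()
```

With an AWS-provided Python base image for Lambda, the image's command would point at the handler (for example `app.handler` if the file is `app.py`); for a traditional orchestrator, the same image could instead start with `python -c "import app; app.serve()"`.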

The benefit of AWS Lambda is that it only charges you while your container is actually executing code, and it bills with millisecond granularity. AWS Lambda also comes with a generous free tier of 1 million requests and 400,000 GB-seconds of compute time per month, which means many small workloads might run completely free. Even beyond the free tier, AWS Lambda is very cost-efficient because it only charges you when you are actually serving traffic.
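To see how the free tier plays out, the sketch below estimates a monthly bill from request count, average duration, and memory size. The free-tier figures come from the paragraph above; the per-request and per-GB-second prices are assumptions based on published x86 pricing in us-east-1, so check the current AWS Lambda pricing page for your Region and architecture. It also assumes the free tier is not already consumed by other functions in the account.

```python
FREE_REQUESTS = 1_000_000           # per month, from the free tier
FREE_GB_SECONDS = 400_000           # per month, from the free tier
PRICE_PER_MILLION_REQUESTS = 0.20   # USD, assumed (x86, us-east-1)
PRICE_PER_GB_SECOND = 0.0000166667  # USD, assumed (x86, us-east-1)


def lambda_monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate one month of Lambda charges after the free tier."""
    # Compute is billed as memory (GB) x duration (seconds).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    return (billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
            + billable_gb_seconds * PRICE_PER_GB_SECOND)


if __name__ == "__main__":
    # 500k requests at 100 ms and 512 MB: 25,000 GB-s, inside the free tier.
    print(lambda_monthly_cost(500_000, 100, 512))
    # 3M requests at 200 ms and 1024 MB: 600,000 GB-s, about $3.73.
    print(round(lambda_monthly_cost(3_000_000, 200, 1024), 2))
```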

AWS Lambda is the best choice for event-driven architectures that execute periodically rather than running all the time. If you are running a higher-traffic web service that receives enough requests to keep the application constantly busy, then you may prefer Amazon Lightsail, Amazon ECS, or Amazon EKS. But for a significant number of workloads, AWS Lambda is the ideal way to run your containerized application.

To learn more about AWS Lambda + container images:

- Read the launch blog for AWS Lambda container image support.

- Check out the tutorial from A Cloud Guru.

More AWS container concepts

The orchestrator you choose is going to be the main interface you deal with when defining and controlling your container deployment. However, there are a few more pieces and tools that you should be aware of. These AWS services support your container deployments on AWS. Some of them may not be relevant to your needs, but you should know they exist just in case.

<img src="/images/posts/choosing-container-on-aws-in-2021/aws-fargate-logo.png" style="float: left; width: 20%; margin-right: 5%; margin-bottom: 20px;" />

AWS Fargate is serverless compute for containers. Think of it as the hosting layer that runs your container for you. You still use Amazon ECS or Amazon EKS as the API to define your deployment, but the container itself runs on AWS Fargate. The advantage is that you no longer need to worry about EC2 instances: you don’t need to manage EC2 capacity, patch and update EC2 instances, or update the agents that ECS and Kubernetes use to control those instances. AWS Fargate pricing is based on the amount of vCPU and memory allocated to your container and how long it runs.
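Because Fargate charges for the vCPU and memory allocated to a task over time, a rough estimate is (vCPU x vCPU-hour price + GB x GB-hour price) x hours. The per-hour prices below are assumptions based on published Linux/x86 pricing in us-east-1, and `fargate_cost` is a hypothetical helper; check the AWS Fargate pricing page for current rates in your Region.

```python
VCPU_HOUR_USD = 0.04048  # assumed: Linux/x86, us-east-1
GB_HOUR_USD = 0.004445   # assumed: Linux/x86, us-east-1


def fargate_cost(vcpu: float, memory_gb: float, hours: float, tasks: int = 1) -> float:
    """Estimate Fargate compute cost for identical tasks running `hours` each."""
    per_task_hour = vcpu * VCPU_HOUR_USD + memory_gb * GB_HOUR_USD
    return tasks * hours * per_task_hour


if __name__ == "__main__":
    # One 0.25 vCPU / 0.5 GB task running for a 730-hour month.
    print(round(fargate_cost(0.25, 0.5, 730), 2))
```

Plugging in your own task size and expected running hours gives a first-order monthly estimate; it ignores data transfer, storage, and load balancer charges.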

Amazon Elastic Container Registry (ECR) is a registry that stores your container images in the same AWS Region where you plan to run them. It supports private images and also offers a public registry, which you can browse at gallery.ecr.aws. The public registry has a generous free tier. Both the public and private registries are highly scalable and replicated, helping you get container images onto hosts inside AWS as quickly as possible.
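ECR image references follow a predictable format, which is handy when wiring an image into any of the orchestrators above. The sketch below builds private and public image URIs using the standard formats `<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>` and `public.ecr.aws/<alias>/<repo>:<tag>` (gallery.ecr.aws is the site for browsing public images, while pulls go to public.ecr.aws). The account ID, alias, and repository names used in the tests are placeholders.

```python
import re


def private_image_uri(account_id: str, region: str, repo: str, tag: str = "latest") -> str:
    """URI for an image in a private ECR repository."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def public_image_uri(alias: str, repo: str, tag: str = "latest") -> str:
    """URI for an image in the ECR Public registry."""
    return f"public.ecr.aws/{alias}/{repo}:{tag}"
```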

AWS Proton is a tool for platform builders. If you are part of a large organization with many services and many teams, you often reach a point where you want to build your own platform on top of AWS. AWS Proton lets infrastructure experts design and distribute prebuilt templates for creating environments and applications, which application developer teams can then reuse to deploy their own containerized applications. Later, the platform team can update these centrally managed templates to update all the applications that were deployed from them.

AWS Cloud Development Kit (CDK) is a framework for defining infrastructure using familiar programming languages. It includes a toolkit for building containerized deployments on ECS, called ecs-service-extensions. For EKS there are also cdk8s and cdk8s+, which let you define Kubernetes resources in code and synthesize them into standard Kubernetes manifests. AWS CDK is amazing at empowering your developers to create their own infrastructure. It can also be used similarly to AWS Proton, empowering a centralized platform team to create reusable infrastructure constructs that other teams can build on top of.

Conclusion

The first step to deploying a containerized application is to choose which service you would like to use as the main interface to manage your deployments. There are four core options:

- Amazon Lightsail Container Services

- Amazon Elastic Container Service

- Amazon Elastic Kubernetes Service

- AWS Lambda with container images

Hopefully this article has helped you to understand the differences between these four choices.

If you have any questions about AWS container services that you’d like answered, please reach out to @nathankpeck on LinkedIn